Positron AI has closed an oversubscribed Series A round, raising $51.6 million to fuel the rollout of its inference-optimized Atlas accelerator hardware. With Atlas already serving production workloads on Parasail’s SnapServe platform and under evaluation at Cloudflare, the Reno-based startup is building momentum as a high-efficiency challenger to AMD and Nvidia in AI inference. AI infrastructure spending is projected to exceed $320 billion in 2025, and Positron’s memory-centric, U.S.-manufactured chips signal a shift toward leaner, power-sipping alternatives for serving generative AI at scale.

Recent Funding Round Highlights

- Total 2025 Funding: With this $51.6 million raise, Positron has now amassed over $75 million in funding this year alone.

- Leading Investors: The round was led by Valor Equity Partners along with Atreides Management and DFJ Growth, with additional investors including Flume Ventures, Resilience Reserve, 1517 Fund, and Unless.

- Use of Proceeds: The new capital will support wider deployment of Atlas, Positron’s first-generation AI hardware, and fund the rollout of its second-generation “Asimov” chips planned for 2026.

Targeting AI Inference Efficiency

AI inference, or the process of running trained models to generate results, has become a costly bottleneck for companies deploying large-scale AI applications. As enterprise demand surges, organizations are increasingly constrained by rising costs, energy limitations, and ongoing GPU shortages.

Positron is positioning itself as a challenger to Nvidia’s dominance by offering specialized, energy-efficient chips tailored for inference workloads. The startup claims its approach significantly improves efficiency: according to the company, its current Atlas system delivers 3.5x better performance per dollar and up to 66% lower power consumption than Nvidia’s flagship H100 and H200 GPUs.
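To make the vendor figures concrete, the Python sketch below converts them into per-token terms. It takes Positron’s claimed 3.5x performance per dollar and 66% lower power at face value, and for the energy figure it assumes Atlas and the GPU deliver the same throughput; the function names are illustrative, not part of any Positron tooling.

```python
def relative_cost_per_token(perf_per_dollar_ratio: float) -> float:
    """Cost per token relative to the baseline GPU (1.0 = same cost)."""
    # Performance per dollar is tokens per dollar, so cost per token is its reciprocal.
    return 1.0 / perf_per_dollar_ratio


def relative_energy_per_token(power_reduction: float, throughput_ratio: float = 1.0) -> float:
    """Energy per token relative to the baseline GPU.

    power_reduction: fractional cut in power draw (0.66 means 66% lower).
    throughput_ratio: tokens/s relative to the baseline (assumed 1.0, i.e. equal speed).
    """
    # Energy per token = power / throughput, both expressed relative to the baseline.
    return (1.0 - power_reduction) / throughput_ratio


cost = relative_cost_per_token(3.5)
energy = relative_energy_per_token(0.66)
print(f"cost per token: {cost:.1%} of baseline")
print(f"energy per token: {energy:.0%} of baseline (if throughput is equal)")
```

If Atlas were also faster than the GPU, passing a `throughput_ratio` above 1.0 would lower the energy figure further; if it were slower, the savings would shrink.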

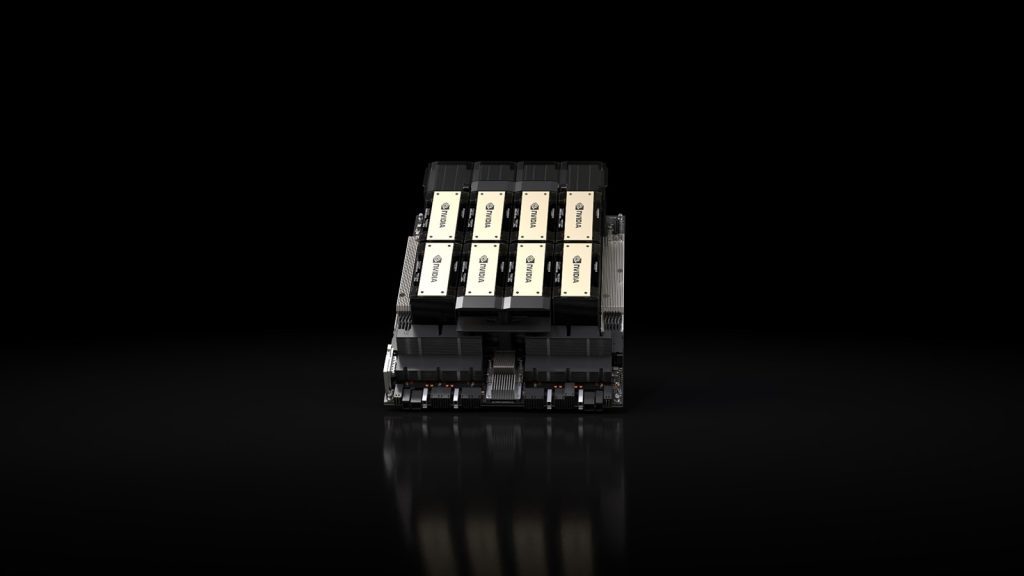

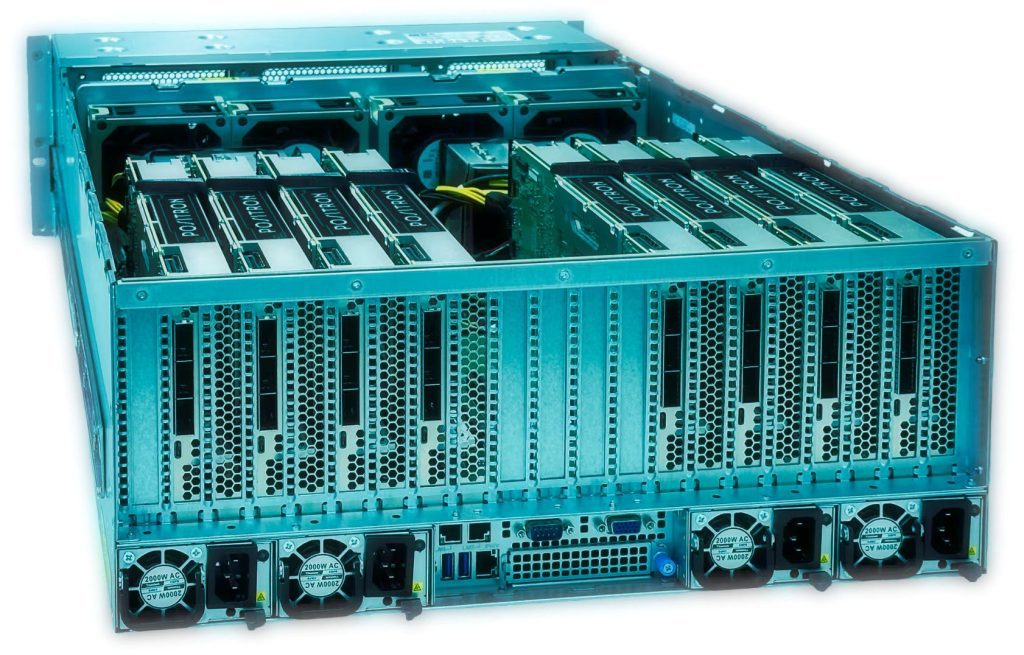

Atlas is built solely to accelerate generative AI applications, which, Positron says, allows for optimizations that general-purpose GPUs can’t match. Its design centers on memory efficiency and ease of deployment. It uses an FPGA-based architecture that the company says achieves over 90% memory bandwidth utilization, far above the 10–30% typical of GPU-based systems. This matters because generating each token of a large model’s output requires streaming the model’s weights from memory, so inference speed is usually limited by memory bandwidth rather than raw compute. Keeping that bandwidth busy is therefore critical for large AI models.
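A common back-of-envelope estimate (not Positron’s own method) shows why utilization matters: in single-stream decoding, each token reads roughly all model weights once, so tokens per second is about effective bandwidth divided by model size in bytes. The sketch below assumes a hypothetical device with 1,000 GB/s of peak bandwidth and an 8B-parameter model in 16-bit weights; it holds peak bandwidth fixed to isolate the effect of utilization, so it is not a comparison of Atlas against any specific GPU.

```python
def decode_tokens_per_second(peak_bandwidth_gbs: float,
                             utilization: float,
                             params_billion: float,
                             bytes_per_param: float) -> float:
    """Rough upper bound on single-stream decode rate for a memory-bound model.

    Assumes every generated token streams all weights from memory once
    (batch size 1; KV-cache traffic and compute time are ignored).
    """
    effective_bandwidth = peak_bandwidth_gbs * 1e9 * utilization  # bytes/s actually achieved
    model_bytes = params_billion * 1e9 * bytes_per_param          # bytes read per token
    return effective_bandwidth / model_bytes


# Hypothetical device and model: 1,000 GB/s peak, 8B parameters, 2 bytes per parameter.
for util in (0.30, 0.90):
    rate = decode_tokens_per_second(1000, util, 8, 2)
    print(f"{util:.0%} utilization -> ~{rate:.0f} tokens/s")
```

Under these assumptions, tripling utilization from 30% to 90% triples the achievable decode rate on the same memory system.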

In practical terms, a single Atlas-powered server (a roughly 2 kW system) can host models with up to half a trillion parameters without needing a cluster of GPUs. Atlas also slots into existing software stacks: it supports Hugging Face Transformers models and offers an OpenAI API-compatible interface for serving requests.
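Because the serving interface is described as OpenAI API-compatible, a client would presumably send the same JSON it sends to OpenAI’s chat completions endpoint. The sketch below builds such a request with Python’s standard library without sending it. The host, port, and model ID are hypothetical placeholders, and it is an assumption that Positron exposes the `/v1/chat/completions` route specifically.

```python
import json
import urllib.request

# Hypothetical endpoint and model: Positron's actual host, port, and model IDs are not given here.
BASE_URL = "http://atlas-server.example:8000/v1"
MODEL = "meta-llama/Llama-3.1-8B-Instruct"  # example Hugging Face model ID, assumed to be loaded


def build_chat_request(prompt: str, api_key: str = "not-needed") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("Summarize the benefits of air-cooled inference hardware.")
print(req.get_method(), req.full_url)
# To send it against a live server, something like:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

The appeal of an OpenAI-compatible interface is that existing client code usually only needs its base URL changed.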

More importantly, deploying Positron’s hardware doesn’t require exotic cooling or special infrastructure tweaks.

“We build chips that can be deployed in hundreds of existing data centers because they don’t require liquid cooling or extreme power densities,” says Mitesh Agrawal, CEO of Positron AI.

This makes Positron’s accelerators a drop-in option for companies looking to scale AI services without overhauling their data center setups.

Early Customers and Industry Context

Although Positron AI is a young company, it already has real-world traction. Cloudflare, the well-known web infrastructure and security provider, is testing Positron’s Atlas cards across its globally distributed, power-constrained data centers. In a July report, The Wall Street Journal noted that Cloudflare was evaluating non-Nvidia, non-AMD AI hardware for inference workloads. Atlas has since been confirmed as one of the contenders undergoing active trials.

Another early adopter is Parasail, an AI deployment network. It uses Positron’s hardware to power heavy inference workloads on SnapServe, an AI-native data platform the two companies co-developed, which lets customers run 3B- and 8B-parameter language models with fast, private, always-on access for just $30 to $60 per month.

Beyond these named customers, Positron reports interest and deployments across sectors where efficient AI inference is critical, such as networking, online gaming, content moderation, content delivery networks (CDNs), and even emerging “Token-as-a-Service” providers. These are all areas where large AI models need to run continuously and cost-effectively to serve end users, so shaving off power usage or latency can translate into big savings and better performance.

Another pocket of surging inference demand comes from subscription-based AI sexting chat services that generate real-time, highly personalized dialogue and imagery for thousands of simultaneous conversations. These platforms care mostly about shaving pennies off each generated token while staying under strict power caps in leased racks. Running on a memory-centric system like Atlas, whose single server can keep half-trillion-parameter models resident without exotic cooling, could translate directly into lower cost per chat session and fewer throttled responses during traffic spikes. That business case mirrors Cloudflare’s: predictable latency, tight power budgets, and quick drop-in deployment.
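A quick way to see how a rack power cap and per-token economics interact is to compute how many servers fit under the cap and what electricity alone costs per million tokens. In the sketch below, only the roughly 2 kW server figure comes from the article; the 10 kW rack cap, 2,000 tokens/s per server, and $0.10/kWh price are hypothetical inputs. It ignores hardware amortization, cooling overhead, and utilization, which usually dominate real cost per token.

```python
def servers_per_rack(rack_cap_kw: float, server_kw: float) -> int:
    """How many whole servers fit under a rack's power cap."""
    return int(rack_cap_kw // server_kw)


def energy_cost_per_million_tokens(server_kw: float,
                                   tokens_per_second: float,
                                   price_per_kwh: float) -> float:
    """Electricity cost (currency units) for one server to generate one million tokens."""
    seconds = 1_000_000 / tokens_per_second  # time needed to produce 1M tokens
    kwh = server_kw * seconds / 3600         # energy drawn over that time
    return kwh * price_per_kwh


# Hypothetical inputs: 10 kW rack cap, ~2 kW servers, 2,000 tokens/s per server, $0.10 per kWh.
print(servers_per_rack(10, 2), "servers per rack")
print(f"${energy_cost_per_million_tokens(2, 2000, 0.10):.4f} of electricity per million tokens")
```

Halving a server’s power draw at the same throughput both halves this electricity figure and doubles how many servers fit under the same cap.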

As for the broader AI hardware market, Nvidia’s GPUs currently dominate AI training and inference in data centers, but their high costs and supply constraints have opened opportunities for specialized alternatives. Positron is one of several startups chasing this opportunity, and not all have thrived so far: rival inference chip startup Groq recently slashed its projected 2025 revenue from over $2 billion to $500 million.

Still, Positron’s strategy of targeting the inference bottleneck (rather than going head-on against Nvidia in training) and proving its tech with real customers early has instilled confidence in investors.

“Improving the cost and energy efficiency of AI inference is where the greatest market opportunity lies, and this is where Positron is focused,” says Randy Glein of DFJ Growth.

Fast Development and “Made-in-USA” Design

Positron’s rapid progress has been notable in an industry where hardware development often takes years. The company was founded in 2023 by CTO Thomas Sohmers and Chief Scientist Edward Kmett, and it brought Atlas from concept to shipping product in roughly 15–18 months with only about $12.5 million in seed funding.

This unusually fast execution was helped by the team’s deep expertise. Sohmers previously worked on AI chips at Groq, and CEO Mitesh Agrawal was COO at Lambda (an AI cloud provider) before joining Positron to lead its commercialization.

In addition to its engineering speed, Positron takes pride in its Made-in-America approach. Its first-gen Atlas cards are built using chips fabricated in the U.S. (using Intel’s foundry services) and assembled domestically, which supports national initiatives to rebuild domestic semiconductor capacity, improves supply chain reliability, and sidesteps some geopolitical risks.

Next-Generation Plans with Titan

With fresh funding in hand, Positron is already gearing up for its next-gen hardware. Codenamed Titan, the upcoming platform will be built around Positron’s custom “Asimov” silicon and is slated to launch in 2026.

Titan is designed to push the boundaries of AI inference even further. Each accelerator card will feature up to 2 terabytes of high-speed memory, enabling a single Titan-powered system to run models as large as 16 trillion parameters and handle much longer context lengths. This kind of capacity is aimed at future frontier AI models that demand enormous memory and throughput.
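The article does not say how many cards a Titan system holds or what numeric precision the 16-trillion-parameter figure assumes, so the sketch below shows how those two unknowns trade off. It counts model weights only (KV cache for long contexts adds more), uses decimal terabytes, and takes the 2 TB per card figure from the article; the precision options are standard formats, not confirmed Titan specifications.

```python
import math

# Bytes per parameter for common weight formats (illustrative; Titan's formats are not specified).
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "fp8/int8": 1.0, "int4": 0.5}


def weight_footprint_tb(params_trillion: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in decimal terabytes (10**12 bytes)."""
    # 10**12 parameters * bytes each / 10**12 bytes per TB cancels to a simple product.
    return params_trillion * bytes_per_param


def cards_needed(params_trillion: float, bytes_per_param: float, card_memory_tb: float = 2.0) -> int:
    """Minimum number of cards whose combined memory holds the weights."""
    return math.ceil(weight_footprint_tb(params_trillion, bytes_per_param) / card_memory_tb)


for name, bpp in BYTES_PER_PARAM.items():
    print(f"16T params @ {name}: {weight_footprint_tb(16, bpp):.0f} TB -> {cards_needed(16, bpp)} cards")
```

For comparison, the half-trillion-parameter models that a single Atlas server can host need about 1 TB for weights at 16-bit precision by the same arithmetic.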

Notably, Titan will continue Positron’s focus on practicality and efficiency. It’s engineered to fit into standard data center racks and use air cooling, avoiding the liquid-cooled setups that some of the latest Nvidia and AMD GPUs require. By sticking with conventional form factors and cooling, Positron hopes to make adopting Titan as straightforward as plugging in a typical server, a selling point for enterprises hesitant to invest in exotic infrastructure.

The AI boom has shifted emphasis from just training ever-larger models to also serving those models to users efficiently. Positron is betting that its memory-centric architecture is the right answer to this shift.

“A key differentiator is our ability to run frontier AI models with better efficiency—achieving 2x to 5x performance per watt and dollar compared to Nvidia,” says Thomas Sohmers, Co-founder and CTO of Positron AI.

Ultimately, the success of Positron’s approach will be measured by whether it can help companies deploy advanced AI applications at scale without breaking the bank on power and hardware. With strong investor backing and early customer wins, Positron AI has the potential to become a serious contender in the AI chip space, turning the industry’s insatiable demand for AI inference into a catalyst for its own growth.